Bird's eye view for a wheelchair

Background

For my university's capstone project, I was tasked with creating a device that helps our client see his surroundings while driving his power wheelchair. Our client was paralyzed below the neck, which meant his power wheelchair was his only means of mobility. Specifically, he needed a device that would let him see around the back half of his wheelchair.

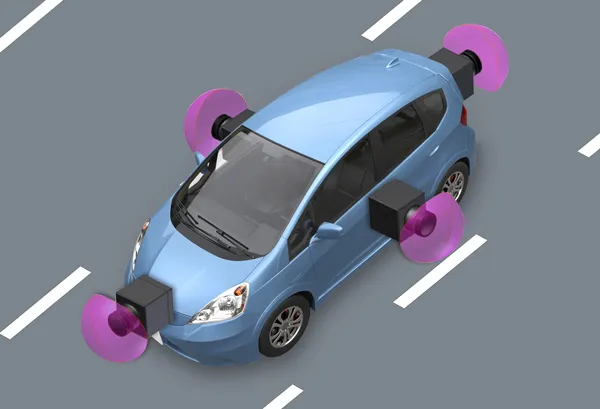

Our team took inspiration from the bird's eye view that some car models provide. The working principle is to attach multiple wide-angle (fisheye) cameras to the sides of the car and computationally combine their video streams into a single top-down view of the car.

Although the system did not look difficult to implement at first, we quickly realized that making it durable and reliable enough for long-term use on a moving wheelchair was extremely difficult. We therefore divided the team into sub-teams. I led the software engineering sub-team, which wrote the code that processes the video frames and combines them into a single bird's eye view.

This blog post explains in detail the inner workings of the GUI application that my sub-team developed.

Implementation

Because our client could see what was in front of him, we decided that three cameras, mounted on the left, right, and back sides of the wheelchair, were sufficient.

Gazebo model used for simulation

The image processing pipeline is undistortion --> projection --> combination. The raw image frames from each camera go through all three steps to produce the bird's eye view.

Undistortion

Wide-angle cameras capture a much wider field of view, but at the cost of heavily distorted images.

Gazebo's camera plugin vs. Gazebo's wide-angle camera plugin (180-degree field of view); the two cameras are the same distance away from the object.

We used wide-angle cameras instead of pinhole cameras, which have much less distortion, because pinhole cameras cannot provide the field of view required to build the bird's eye view.

Overlapping fields of view highlighted in red

The fields of view of the cameras need to overlap to produce a continuous view of the surroundings.

Hence, the first step in the image processing pipeline is undistorting the raw image frames. This is fairly simple with OpenCV's fisheye camera module (cv::fisheye).

Using the cv::findChessboardCorners() function, you can find the corner points of a chessboard (a corner point is an inner corner where two white tiles and two black tiles meet). The coordinates of these corner points encode how severe the distortion is. Passing them, together with the chessboard's known geometry, to the cv::fisheye::calibrate() function yields the camera matrix and the 4 distortion coefficients that describe the distortion mathematically (the math is explained at https://docs.opencv.org/4.x/db/d58/group__calib3d__fisheye.html).
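To make the 4 coefficients less abstract, here is a minimal sketch that projects a 3D point through the polynomial fisheye model described on that OpenCV page, which is the model cv::fisheye::calibrate() fits. It assumes zero skew (OpenCV's alpha parameter). The FisheyeCamera struct and projectFisheye() function are hypothetical names for illustration; in real OpenCV code, cv::fisheye::projectPoints() performs this projection.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical container for the calibration output: the camera matrix
// entries (fx, fy, cx, cy) and the four fisheye coefficients k1..k4.
struct FisheyeCamera {
    double fx, fy, cx, cy;
    std::array<double, 4> k;
};

struct Pixel {
    double u, v;
};

// Projects a 3D point (X, Y, Z) in camera coordinates onto the distorted
// image using the polynomial fisheye model; skew is assumed to be zero.
Pixel projectFisheye(const FisheyeCamera& cam, double X, double Y, double Z) {
    assert(Z > 0.0);  // this sketch only handles points in front of the camera
    const double a = X / Z;
    const double b = Y / Z;
    const double r = std::sqrt(a * a + b * b);
    const double theta = std::atan(r);  // angle between the ray and the optical axis

    // theta_d = theta * (1 + k1*theta^2 + k2*theta^4 + k3*theta^6 + k4*theta^8)
    const double t2 = theta * theta;
    const double thetaD = theta * (1.0 + cam.k[0] * t2 + cam.k[1] * t2 * t2 +
                                   cam.k[2] * t2 * t2 * t2 +
                                   cam.k[3] * t2 * t2 * t2 * t2);

    // Scale the normalized coordinates; as r -> 0 the ratio thetaD / r -> 1.
    const double scale = (r > 1e-12) ? thetaD / r : 1.0;
    return {cam.fx * scale * a + cam.cx, cam.fy * scale * b + cam.cy};
}
```

With all four coefficients at zero, the distance from the image centre is proportional to the angle θ itself rather than to tan θ as in a pinhole camera. The coefficients then bend that curve to match the real lens.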

Using the camera matrix, the 4 distortion coefficients, cv::fisheye::initUndistortRectifyMap(), and cv::remap(), you can produce the undistorted image frames. The undistortion process reduces the field of view, but the remaining field of view is still sufficient to produce the bird's eye view.
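Conceptually, cv::fisheye::initUndistortRectifyMap() builds a lookup table that stores, for every pixel of the undistorted output, where that pixel comes from in the distorted input. cv::remap() then samples the input at those locations. The sketch below reproduces that idea under simplifying assumptions: no rectification rotation (R is the identity), a grayscale image, and nearest-neighbour sampling instead of the interpolation modes cv::remap() offers. Image, Map, buildUndistortMap(), and remapNearest() are hypothetical names. newFx, newFy, newCx, and newCy stand in for the "new camera matrix" argument.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical types: fisheye intrinsics, a grayscale image, and a lookup map.
struct FisheyeCamera {
    double fx, fy, cx, cy;
    std::array<double, 4> k;  // k1..k4
};

struct Image {
    int width, height;
    std::vector<std::uint8_t> pixels;  // row-major, one byte per pixel
    Image(int w, int h) : width(w), height(h), pixels(static_cast<std::size_t>(w) * h, 0) {}
    std::uint8_t& at(int x, int y) { return pixels[static_cast<std::size_t>(y) * width + x]; }
    std::uint8_t at(int x, int y) const { return pixels[static_cast<std::size_t>(y) * width + x]; }
};

struct Map {
    std::vector<float> mapX, mapY;  // distorted source coordinates for each output pixel
};

// For every pixel of the undistorted output, compute the matching pixel in the
// distorted input. (newFx, newFy, newCx, newCy) act as the new camera matrix.
Map buildUndistortMap(const FisheyeCamera& cam, double newFx, double newFy,
                      double newCx, double newCy, int width, int height) {
    Map map;
    map.mapX.resize(static_cast<std::size_t>(width) * height);
    map.mapY.resize(map.mapX.size());
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            // Normalized ray through output pixel (u, v) in a distortion-free pinhole camera.
            const double a = (u - newCx) / newFx;
            const double b = (v - newCy) / newFy;
            const double r = std::sqrt(a * a + b * b);
            const double theta = std::atan(r);
            const double t2 = theta * theta;
            const double thetaD = theta * (1.0 + cam.k[0] * t2 + cam.k[1] * t2 * t2 +
                                           cam.k[2] * t2 * t2 * t2 +
                                           cam.k[3] * t2 * t2 * t2 * t2);
            const double scale = (r > 1e-12) ? thetaD / r : 1.0;
            // Apply the fisheye model to find where this ray hits the distorted image.
            const std::size_t i = static_cast<std::size_t>(v) * width + u;
            map.mapX[i] = static_cast<float>(cam.fx * scale * a + cam.cx);
            map.mapY[i] = static_cast<float>(cam.fy * scale * b + cam.cy);
        }
    }
    return map;
}

// Fills each output pixel from the nearest source pixel named by the map;
// pixels whose source falls outside the input stay black.
Image remapNearest(const Image& src, const Map& map, int width, int height) {
    assert(map.mapX.size() == static_cast<std::size_t>(width) * height);
    Image dst(width, height);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            const long sx = std::lround(map.mapX[i]);
            const long sy = std::lround(map.mapY[i]);
            if (sx >= 0 && sx < src.width && sy >= 0 && sy < src.height) {
                dst.at(x, y) = src.at(static_cast<int>(sx), static_cast<int>(sy));
            }
        }
    }
    return dst;
}
```

The map runs from output to input rather than pushing input pixels forward, so every output pixel receives exactly one value and no holes appear. The new camera matrix controls the field-of-view trade-off: smaller newFx and newFy make each output pixel cover a wider angle, keeping more of the scene. OpenCV's cv::fisheye::estimateNewCameraMatrixForUndistortRectify() can compute such a matrix from a balance parameter.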

One important point when computing the distortion coefficients: for accurate results, the chessboard must be shown to the camera in as many orientations as possible. This is why I made a moving chessboard in Gazebo.
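Each position of the moving chessboard gives one calibration view. cv::fisheye::calibrate() takes the object points (the corners' 3D coordinates in the board's own frame) as one list per view, alongside the detected image points. Because the board is rigid and flat, every view's object points are identical with z = 0; only the image points change as the board moves. Below is a minimal sketch of building them. Point3, chessboardObjectPoints(), and objectPointsForViews() are hypothetical names; OpenCV code would typically use cv::Point3f.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 3D point type.
struct Point3 {
    double x, y, z;
};

// 3D positions of the chessboard's inner corners in the board's own frame.
// The board is flat, so every corner has z = 0. Corners are listed row by row.
std::vector<Point3> chessboardObjectPoints(int cornersPerRow, int cornersPerCol,
                                           double squareSize) {
    assert(cornersPerRow > 0 && cornersPerCol > 0 && squareSize > 0.0);
    std::vector<Point3> points;
    points.reserve(static_cast<std::size_t>(cornersPerRow) * cornersPerCol);
    for (int row = 0; row < cornersPerCol; ++row) {
        for (int col = 0; col < cornersPerRow; ++col) {
            points.push_back({col * squareSize, row * squareSize, 0.0});
        }
    }
    return points;
}

// One copy of the same object points per captured view: the board never
// changes shape, only its pose relative to the camera does.
std::vector<std::vector<Point3>> objectPointsForViews(std::size_t numViews, int cornersPerRow,
                                                      int cornersPerCol, double squareSize) {
    return std::vector<std::vector<Point3>>(
        numViews, chessboardObjectPoints(cornersPerRow, cornersPerCol, squareSize));
}
```

For example, a board with 9 x 6 inner corners yields 54 object points per view. The square size only sets the units of the estimated board poses; it does not change the camera matrix or the distortion coefficients.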

Projection

The next step in the image processing pipeline is projecting the image frames into a top-down view. This means transforming each camera's perspective from a side view into a top-down view. OpenCV provides the functions cv::getPerspectiveTransform() and cv::warpPerspective() for exactly this task.
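A 3x3 perspective transform acts on a point through homogeneous coordinates: multiply [x, y, 1] by the matrix, then divide by the third component. That division is what lets lines that converge in the side view become parallel in the top-down view, which a purely affine transform cannot do. The sketch below applies a matrix to a single point, which is what cv::perspectiveTransform() does for point sets. cv::warpPerspective() applies the same formula per pixel, by default with the inverted matrix, so that it looks up a source pixel for every destination pixel. Mat3, Point2, and applyPerspective() are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x, y;
};

// Maps a point through a 3x3 perspective matrix H:
// [xh, yh, w]^T = H * [x, y, 1]^T, then divide by w.
Point2 applyPerspective(const Mat3& H, Point2 p) {
    const double xh = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double yh = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::fabs(w) > 1e-12);  // w == 0 would send the point to infinity
    return {xh / w, yh / w};
}
```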

The cv::getPerspectiveTransform() function takes 4 source points and 4 destination points and calculates the 3x3 matrix that describes the intended perspective transform. This matrix can then be passed to cv::warpPerspective() to produce the image frame with the transformed perspective.
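cv::getPerspectiveTransform() needs exactly 4 point pairs. Once the bottom-right entry of the 3x3 matrix is fixed to 1, eight unknowns remain, and each pair contributes two linear equations. The four points must also be in general position (no three collinear). Below is a minimal sketch of the standard formulation, which sets up that 8x8 system and solves it with Gaussian elimination. It is an illustration, not OpenCV's source; perspectiveFromFourPoints(), applyPerspective(), Mat3, and Point2 are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x, y;
};

// Computes H (with H[2][2] fixed to 1) mapping src[i] to dst[i]. Each pair
// (x, y) -> (u, v) contributes two linear equations:
//   h11*x + h12*y + h13 - h31*x*u - h32*y*u = u
//   h21*x + h22*y + h23 - h31*x*v - h32*y*v = v
Mat3 perspectiveFromFourPoints(const std::array<Point2, 4>& src,
                               const std::array<Point2, 4>& dst) {
    std::array<std::array<double, 9>, 8> A{};  // augmented system [M | rhs]
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        A[2 * i] = {x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u, u};
        A[2 * i + 1] = {0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v, v};
    }

    // Gaussian elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r) {
            if (std::fabs(A[r][col]) > std::fabs(A[pivot][col])) pivot = r;
        }
        // A (near-)zero pivot means the points do not define a unique transform.
        assert(std::fabs(A[pivot][col]) > 1e-12);
        std::swap(A[col], A[pivot]);
        for (int r = col + 1; r < 8; ++r) {
            const double factor = A[r][col] / A[col][col];
            for (int c = col; c < 9; ++c) A[r][c] -= factor * A[col][c];
        }
    }

    // Back substitution for h11..h32.
    std::array<double, 8> h{};
    for (int r = 7; r >= 0; --r) {
        double sum = A[r][8];
        for (int c = r + 1; c < 8; ++c) sum -= A[r][c] * h[c];
        h[r] = sum / A[r][r];
    }
    return Mat3{{{h[0], h[1], h[2]}, {h[3], h[4], h[5]}, {h[6], h[7], 1.0}}};
}

// Maps a point through H using homogeneous coordinates.
Point2 applyPerspective(const Mat3& H, Point2 p) {
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {(H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
            (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w};
}
```

In a typical bird's eye view setup, the source points mark four positions on the ground in an undistorted camera frame, and the destination points mark where those positions should appear in the top-down image. A trapezoid on the floor, for example, becomes a rectangle.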

Back camera view

Left camera view

Right camera view

Combination

With the projection matrices calculated, the final step is to combine all projected images into a single bird's eye view. This is a simple process: create a blank image matrix large enough to fit all the projected images, then draw each projected image onto it.
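Here is a minimal sketch of the combination step. It assumes grayscale images and a known pixel offset for each projected image on the canvas. It also treats pure black (0) as "no data", which matches the constant black border cv::warpPerspective() fills in by default. Image, Placement, and combine() are hypothetical names. In OpenCV terms this corresponds roughly to copyTo() into a cv::Rect region of the canvas with a mask of non-zero pixels.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical grayscale image, stored row-major.
struct Image {
    int width, height;
    std::vector<std::uint8_t> pixels;
    Image(int w, int h) : width(w), height(h), pixels(static_cast<std::size_t>(w) * h, 0) {}
    std::uint8_t& at(int x, int y) { return pixels[static_cast<std::size_t>(y) * width + x]; }
    std::uint8_t at(int x, int y) const { return pixels[static_cast<std::size_t>(y) * width + x]; }
};

// Where one projected image goes on the bird's eye view canvas.
struct Placement {
    const Image* image;
    int offsetX, offsetY;
};

// Draws every projected image onto a blank (black) canvas. Pixels equal to 0
// are treated as "no data" and skipped, so the empty borders of one projected
// image do not erase a neighbouring camera's pixels; where real data overlaps,
// the image drawn last wins.
Image combine(int canvasWidth, int canvasHeight, const std::vector<Placement>& placements) {
    Image canvas(canvasWidth, canvasHeight);
    for (const Placement& p : placements) {
        assert(p.image != nullptr);
        for (int y = 0; y < p.image->height; ++y) {
            for (int x = 0; x < p.image->width; ++x) {
                const int cx = x + p.offsetX;
                const int cy = y + p.offsetY;
                if (cx < 0 || cx >= canvasWidth || cy < 0 || cy >= canvasHeight) continue;  // clip to canvas
                const std::uint8_t value = p.image->at(x, y);
                if (value != 0) canvas.at(cx, cy) = value;
            }
        }
    }
    return canvas;
}
```

The "0 means no data" rule is a simplification: genuinely black scene pixels are skipped too. Blending the overlapping regions instead of letting the last image win would be an alternative design.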
