Iterative Closest Point Algorithm using Octree (CUDA Accelerated)

Background

Scan matching algorithms are ubiquitous in the field of mobile robotics. Although there are countless variations of the algorithm with each one having its benefits and drawbacks, the overarching goal of the algorithms is the same.

Given two sets of points, the algorithm finds the optimal rigid body transformation (rotation and translation) that aligns one set of points onto the other.

They are specifically useful in localization (estimating the robot's pose)

algorithms as finding the rigid transformation between two consecutive laser

scans from the same robot means finding the accurate change in position and

orientation of the robot.

One of the most commonly used variation of the scan matching algorithm is the

iterative closest point (ICP) algorithm.

ICP takes a 2-step approach to scan matching

- Finding the corresponding pairs of points in the two point sets

- Finding the rigid body transformation

Finding corresponding pairs means finding two points (one in each point set)

that correspond to the same point in space.

It turns out that if the optimal correspondence is known, that is if all

points in one set is matched to their correct pair in the other set, finding

the optimal rigid body transformation is closed form. However, this is

extremely unlikely to occur in real life scenarios as we are trying to match

two laser scans taken from two different points of views.

In most cases, the number of points in the two point sets will not be equal in

the first place, making it impossible to find the optimal correspondence.

To overcome this limitation, the ICP algorithm estimates the correspondence by

choosing the closest point pairs to be the corresponding pairs (a point in set

A is matched to the closest point in set B). This method will obviously fail

to return the optimal rigid body transformation in one try as the

correspondence is not correct. Thus, the algorithm iterates until the error is

under a predefined threshold, hence the name Iterative Closest Point.

Essentially, the problem is turned into a least squares optimization problem.

The summary of the entire algorithm is:

Given two point sets X and P and the goal is to find the transformation that

will align point set P to point set X

- For each point in set P, find the closest point in set X and declare them as a corresponding pair

- Find the optimal transformation for the given correspondence using singular value decomposition

- Apply the transformation to point set P

- Calculate the error

- Iterate steps 1 to 4 until the error is under a predefined threshold

This algorithm has been implemented many time and the biggest performance

bottleneck proved to the be finding the closest points in the two point sets.

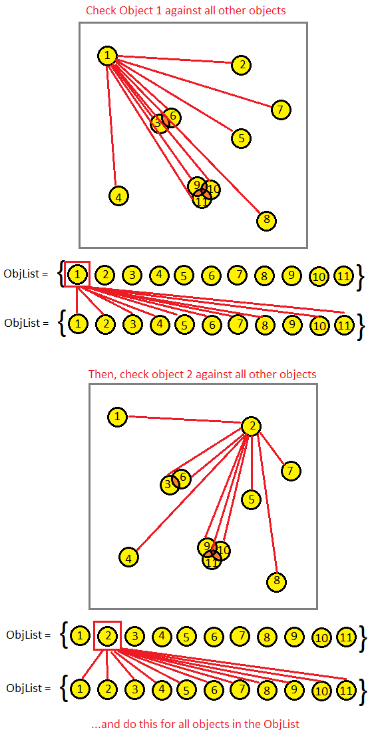

The brute-force way to obtain the following information is to calculate the

distance to every point in set X for each point in set P and selecting the

point pair with the smallest distance.

The approach has a complexity of O(NM), where N is the number of points in set

X and M in the number of points in set P, and it is not feasible for

application in robotics because the number of points in each laser scan can be

extremely large and the algorithm must complete in orders of milliseconds to

be used in practical localization algorithms. In short, optimizations must be

made.

Octree-based Parallel ICP Implementation

To optimize finding the closest point pairs, I employed two methods of

optimization: Octree and Parallel Programming.

Octree is a tree data structure for recursively partitioning a space into 8

smaller regions. It is essentially a multi-dimensional binary search tree.

Each point in set X is stored in the leaf nodes of the tree and searching for

the closest point in set X for a point in set P means traversing down the

octree until a leaf node then performing the brute-force distance comparison.

(this is a quadtree not an octree, but the idea is the exact same)

Although you are doing the brute-force distance comparison at the leaf node,

the number of points to compare is much smaller because you are only comparing

between the points inside the region that the leaf node represents.

The other optimization technique I used was parallel programming. The essence

of parallel programming is to perform computations on multiple threads at

once, thus decreasing the total completion time of the algorithm compared to

the sequential computation method where a single computation is done one after

the other. The GPU is the preferred hardware to perform parallel programming

as it is comprised of much more processing units, though less powerful,

compared to the CPU. For this project, I used Nvidia's CUDA toolkit to make my

code parallel.

However, parallelization comes with the heavy cost of making the code much

more complicated to write and debug. Part of the reason is due to the need for

synchronization. Take for example, summing an array of integers. Coding this

sequentially is extremely easy: iterate through the elements one by one and

add its value to the total. The parallel version on the other hand, is much

more complicated.

A method called parallel reduction must be used where the array is iteratively

divided in half and added to the other half where each addition operation is

done on a individual thread to speed up the process. Between each step labeled

in the diagram, all threads must be synchronized, meaning if a thread finishes

its addition operation faster than the other threads, it must wait until all

threads have completed their addition task before moving onto the next step.

Omitting the synchronization step can lead to all kinds of issues common to

multithreading, such as a race condition.

However, summing up an array of integers is an inherently sequential, which is

why it is much more difficult to make it parallel. The reason I showed this

example is to prove the point that even the simplest algorithms can become

difficult to parallelize if it is not meant to be parallelized. Thus, when

optimizing an algorithm through parallel programming, it is important to first

consider the inherent nature of the algorithm.

Naturally, the algorithms that benefit the most from parallelization are ones

that do not need to be synchronized, which is the case for searching through

an octree. The process of searching the closest point in set X for each point

in set P from an octree is an entirely independent process, meaning the search

operation of one point does not depend on the search operation of another

point, and thus they do not need to be synchronized. Exploiting this

inherently parallel nature of the nearest point search, the process of finding

the closest point for each point in set P can be dramatically optimized.

Optimizing this step is a massive performance boost for the entire ICP

algorithm as it is the performance bottleneck of the algorithm.

Result

3 videos showing the algorithm in motion:

- Red points = target point cloud

- Green points = source point cloud (the transformation is applied to this point cloud)

- Blue lines = nearest neighbor correspondence

Showing the algorithm in slowed down and showing the correspondence lines:

Full speed of the algorithm without the correspondence lines (actually this is

slowed down too because the visualization software that I used (RViz) lagged

when it was run at full speed)

Actual full speed footage:

Comments

Post a Comment